Looking back … three years ago, GPT-2 was one of the most advanced language models available. Yet it still got the easiest conversations wrong. It didn’t just hallucinate; it produced unstructured and nonsensical responses. Now ChatGPT (including the integrated GPT-4 model) can answer questions on an extremely wide range of topics, from physics, biology, history, arts and engineering to sports and even entertainment. It has become good at reasoning, is creative, writes and understands code, and passes many professional exams, outscoring many human test-takers.

… and looking forward … if OpenAI, Google, Amazon, or anyone else manages another hat trick like the one we saw in the last three years, we could soon encounter an AI far superior to humans in cognitive performance.

… Uh oh…

Well-known figures such as Elon Musk (Tesla, Twitter), Steve Wozniak (Apple) and Yuval Noah Harari (Sapiens, Homo Deus), along with tens of thousands of other signatories, called for a pause, a moratorium, a couple of weeks ago:

We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4 […]

Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? […]

Society has hit pause on other technologies with potentially catastrophic effects on society. We can do so here.

Open Letter Page

What if there is no pause? Will AI systems be far beyond our intelligence in 2 or 3 years? And in 5 years, will they reach the threshold of superintelligence, an intelligence that far exceeds that of humans in the vast majority of fields of interest?

Will AI attempt to dominate the world in 5, 10 or 20 years, maybe even exterminate us? Or is this just science fiction?

Many people seem to believe that SMI [Superhuman Machine Intelligence] would be very dangerous, if it were developed, but think that it’s either never going to happen or definitely very far off. This is sloppy, dangerous thinking.

blog.samaltman.com

Sam Altman, the CEO of OpenAI, the company that developed GPT-4 and ChatGPT, wrote these words many years before ChatGPT or even GPT-2 hit the market. Now OpenAI is calling for an international body like the IAEA (the International Atomic Energy Agency, which oversees the use of nuclear energy).

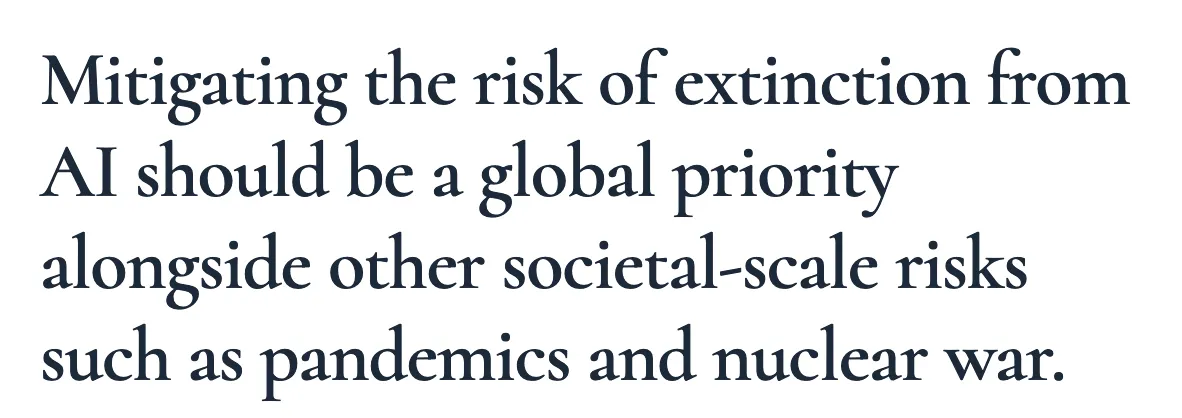

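Altman, along with quite a few “notable figures” and a long list of AI scientists, recently signed the following statement on AI risk:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.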

Do AI believers completely overestimate the capabilities of their models in order to make themselves important?

Let’s look at the facts.

Superintelligence is not about some silicon-based, smarter brother of Einstein able to unite relativity and quantum theory in a single elegant equation. Rather, superintelligence is about an entity whose intelligence relates to ours roughly as ours relates to that of ants. If this entity bothered to write down a brilliant thought, we would not even understand what genre it belonged to.

When we look at how we, as one of the most intelligent species on Earth, treat other species (domesticate, slaughter, exterminate), and how technologically or organizationally superior societies treat less advanced ones (rob, subjugate, enslave, destroy), it is at least an intriguing question how an intellectually superior being would treat us.

So, can superior machine intelligence really harm us? Or even wipe out life on Earth? I’ll split the question into four sub-questions and try to answer each of them:

☐ 1. Are the machines at least close to artificial general intelligence (AGI)?

A superintelligent AI specialized in image recognition, chess, music composition or chat will simply lack the skills needed to take over the world. To do so, an AI would need the ability to persuade humans, break into computer systems, build machinery and weapons, fold proteins and optimize itself (among many other skills). It would have to develop and pursue a long-term strategy with multiple steps. If AI is still far from AGI, specialized superintelligent machines may still pose risks, but there is no evidence that they could take over.

☐ 2. Is machine intelligence really growing so fast that it can reach superintelligence in the foreseeable future?

While the first question concerns the breadth of a machine’s capabilities, this one is about the depth of its intelligence, its performance compared to humans: how much better is it? If machines are likely to only match or slightly surpass humans in the next few decades, we need not worry about it now.

☐ 3. Will a superintelligent AI really want to dominate the world?

Aren’t these just bedtime stories for data scientists? Why would an AI become evil? Can’t we build it to be nice, or at least integrate some control that automatically pulls the plug when the piece of scrap gets sassy?

☐ 4. Is it possible for an AI to take over the world simply through superior intelligence?

Systems like GPT-4 already show an amazing level of intelligence. But they can’t even turn their own servers on or off. How on earth are they supposed to turn us off?

If we can check all four boxes, then we have a problem. A big one, which would need to be solved in a short period of time.

1. GPT-4 & company: Are the machines at least close to an artificial general intelligence (AGI)?

We keep reassuring ourselves that we’re not there yet: AI is nowhere near as intelligent as humans. Whenever machines excel at something that requires real intelligence from a human, we tend to dismiss it after the fact as not really intelligent:

A system can complete a mathematical proof spanning hundreds of pages? “Not intelligent, just pure application of rules.” Recognize millions of people from their pictures? “Not very intelligent. My dog can do that, and he doesn’t even need pictures!” Drive thousands of miles without an accident? “Uber drivers can do that as well. Does that mean they are an intelligent life form?” Score in the top 10% on the American bar exam, among many other tests? “Not intelligent, just memorizing content available on the internet,” most Reddit users say.

These statements are a bit like the retreats of a chauvinistic species. The core chauvinistic, or speciesist, mantra is: “When somebody else does the same thing we do, of course it’s not the same thing; it’s something else.” On the other hand, they have a point: AI shows excellence, but not broad, human-like intelligence. A chess AI is dumb as a doughnut in other areas, unlike a good human chess player, who can typically also answer an email, buy a few things for dinner and recognize Magnus Carlsen in a photo.

An AI that wants to take over the world must be able to perform a wide range of human tasks as well as or better than a human. It must be able to handle world knowledge, plan, communicate in language, make inferences, understand visual information and control other systems. Special talents like playing chess, driving a car or doing mathematical proofs are not enough. At the moment, no such system exists. But from my point of view, we are on the way to full Artificial General Intelligence (AGI). Let’s look at the performance of language systems.

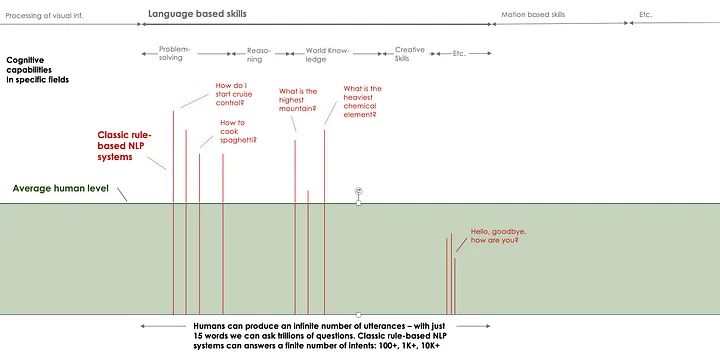

Classic rule-based chatbots rely on programmed intents, i.e., user goals that the system can process:

time_inquiry => output the current time

The user utterances mapped to this intent can vary widely:

Tell me the time? What time is it? Time of day?

Small systems, such as self-service machines, can process a few intents; a car, many hundreds; Siri, Google Assistant and Alexa, a few dozen to a few hundred more. Some of these intents are parameterizable, i.e., numbers, names or places in an utterance can vary.
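To make the idea concrete, here is a minimal Python sketch of such an intent-based bot. The intent table, the regex patterns and the hypothetical `set_timer` intent (standing in for a parameterizable intent) are illustrative assumptions, not the design of any particular product; production systems often replace the regexes with a trained intent classifier, but every intent still has to be defined in advance.

```python
import re
from datetime import datetime
from typing import Callable, Optional

# Hypothetical intent table: intent name -> regex patterns for utterances mapped to it.
# Capture groups act as parameters ("slots"), e.g. the number of minutes.
INTENT_PATTERNS: dict[str, list[str]] = {
    "time_inquiry": [r"\btell me the time\b", r"\bwhat time is it\b", r"\btime of day\b"],
    "set_timer": [r"\bset a timer for (\d+) minutes?\b"],
}

# Each intent is bound to one handler; the handler receives the captured slot values.
HANDLERS: dict[str, Callable[..., str]] = {
    "time_inquiry": lambda: datetime.now().strftime("It is %H:%M."),
    "set_timer": lambda minutes: f"Timer set for {int(minutes)} minutes.",
}


def match_intent(utterance: str) -> Optional[tuple[str, tuple[str, ...]]]:
    """Return (intent, slot values) for the first matching pattern, else None."""
    text = utterance.lower()
    for intent, patterns in INTENT_PATTERNS.items():
        for pattern in patterns:
            m = re.search(pattern, text)
            if m:
                return intent, m.groups()
    return None


def respond(utterance: str) -> str:
    """Dispatch to the handler of the matched intent; anything unlisted falls through."""
    match = match_intent(utterance)
    if match is None:
        return "Sorry, I didn't understand that."
    intent, slots = match
    return HANDLERS[intent](*slots)
```

Any phrasing outside the table, such as “How long until sunset?”, falls through to the fallback reply, no matter how many intents have been added.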

With such a system, even if you expand it year after year, you could never reach AGI level in the language domain. Humans can understand a practically unlimited number of intents from the people they talk to (even if they cannot answer all of them satisfactorily). Even if you cap an utterance at 15 words, trillions of intents can be expressed. In other words, even if you expand the number of intents a system can process to 1,000, 10,000 or 100,000, you will not come close to covering them all.
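A quick back-of-the-envelope calculation shows how fast this space grows. The sketch below is an illustration under stated assumptions: it counts raw word sequences of up to 15 words, not distinct meaningful intents, so it is only a loose upper bound, and the vocabulary sizes of 1,000 and 10,000 words are hypothetical.

```python
def count_word_sequences(vocab_size: int, max_words: int) -> int:
    """Number of word sequences of length 1..max_words over a vocabulary of vocab_size words.

    This counts raw sequences (the sum of vocab_size**n), not distinct meaningful
    intents, so it is only a loose upper bound on what a speaker could express.
    """
    return sum(vocab_size ** n for n in range(1, max_words + 1))


# Hypothetical vocabulary sizes, chosen only for illustration.
for vocab in (1_000, 10_000):
    total = count_word_sequences(vocab, 15)
    # The number of digits minus one gives the order of magnitude.
    print(f"vocabulary {vocab:>6}: about 10^{len(str(total)) - 1} sequences of up to 15 words")
```

Even if only a tiny fraction of these sequences were meaningful, the result would still dwarf a hand-built table of 1,000, 10,000 or 100,000 intents.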

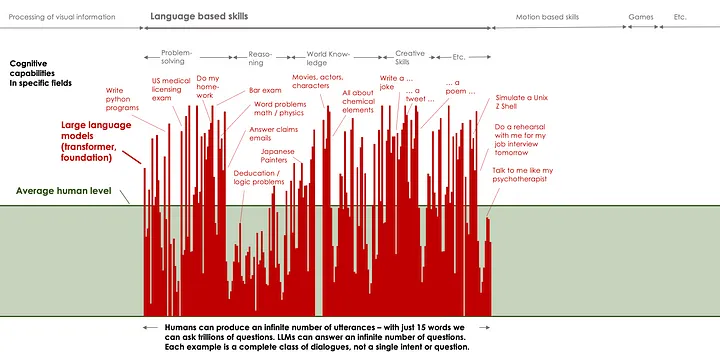

Large language models do not merely answer more questions; they open a new dimension in the scope of understanding, a little like the relation between whole numbers (integers) and real numbers. While a classic rule-based chatbot can answer a finite, countable number of intents, LLMs can fill all the spaces in between. In principle, they can understand and answer all syntactically and semantically well-formed utterances, long and short, and even ones that aren’t well-formed.

And voilà, we are getting closer to true AGI. It’s not truly “general” in its intelligence just yet, though, as it is still limited to language interactions. But language is the key cognitive field in which human performance differs most from that of our fellow mammals. It’s perhaps more apt to say that LLMs have reached the stage of Artificial Lieutenant General Intelligence (ALGI).

Just a short note: being able to understand and answer every question is not the same as being able to answer every question correctly. The current key mantra of most LLMs is the old Italian saying se non è vero, è ben trovato: it might not be true, but at least it’s well hallucinated.

If we look at other fields, such as image recognition and generation, spatial movement, game mastery (MuZero) or speech-to-text conversion, it becomes evident that we are making progress toward ALGI across multiple domains.

The challenging part might already be behind us: the transition from solving a finite list of single problems (natural numbers) to controlling a complete problem space (real numbers).

Switching back and forth between different, highly capable domain ALGIs may be so simple that platforms only slightly more advanced than Auto-GPT or BabyAGI could manage it within a few months. These autonomous agents add an agent layer on top of the models, using them as granular reasoners to solve the individual steps of a longer task.
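The agent-layer idea can be sketched in a few lines, loosely in the spirit of a task-queue loop. The function names, the queue-based design and the stubbed model calls are assumptions for illustration, not the actual code of Auto-GPT or BabyAGI; in a real agent, `execute` and `propose` would each prompt a language model.

```python
from collections import deque
from typing import Callable

# Hypothetical model interfaces (assumptions, not any real agent framework's API):
# execute(task, earlier_results) -> result text
# propose(goal, task, result) -> list of follow-up tasks
Execute = Callable[[str, list[str]], str]
Propose = Callable[[str, str, str], list[str]]


def run_agent(goal: str, first_task: str, execute: Execute, propose: Propose,
              max_steps: int = 10) -> list[str]:
    """Keep a task queue; the model solves one task at a time and may enqueue follow-ups."""
    queue = deque([first_task])
    results: list[str] = []
    while queue and len(results) < max_steps:
        task = queue.popleft()
        result = execute(task, results)             # model acts as a reasoner for one step
        results.append(result)
        queue.extend(propose(goal, task, result))   # model decides what to do next
    return results


# Stubs standing in for model calls, so the loop can run without an LLM.
def fake_execute(task: str, context: list[str]) -> str:
    return f"done: {task}"


def fake_propose(goal: str, task: str, result: str) -> list[str]:
    follow_ups = {"research topic": ["draft outline"], "draft outline": ["write summary"]}
    return follow_ups.get(task, [])


print(run_agent("write a report", "research topic", fake_execute, fake_propose))
# → ['done: research topic', 'done: draft outline', 'done: write summary']
```

The `max_steps` cap is a design choice worth keeping in any such loop: without it, a model that keeps proposing follow-up tasks would run forever.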

To be frank, we’ve reached a point where AGI is not yet fully realized but clearly within reach. So, unfortunately, our answer to the first question is yes. We are almost there:

☑ 1. Are the machines at least close to AGI?

☐ 2. Is machine intelligence really growing so fast that it can reach superintelligence in the foreseeable future?

☐ 3. Will a superintelligent AI really want to dominate the world?

☐ 4. Is it possible for an AI to take over the world simply through superior intelligence?

2. Explodential Growth: Is machine intelligence really growing so fast that it can reach superintelligence in the foreseeable future?

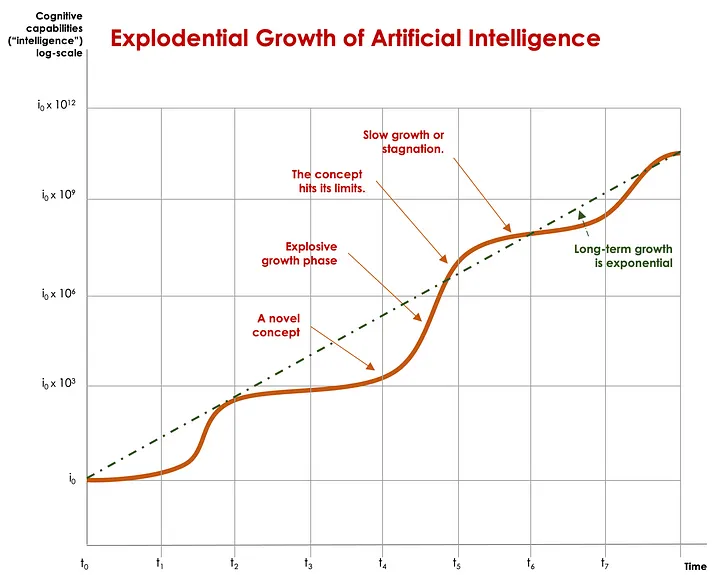

The development of artificial intelligence is quite different from, say, the performance of microchips, which has shown exponential, if slowing, growth according to Moore’s law. We cannot measure the growth of intelligence precisely because we have no general measure of machine intelligence (the following quantitative statements in particular are not bulletproof).

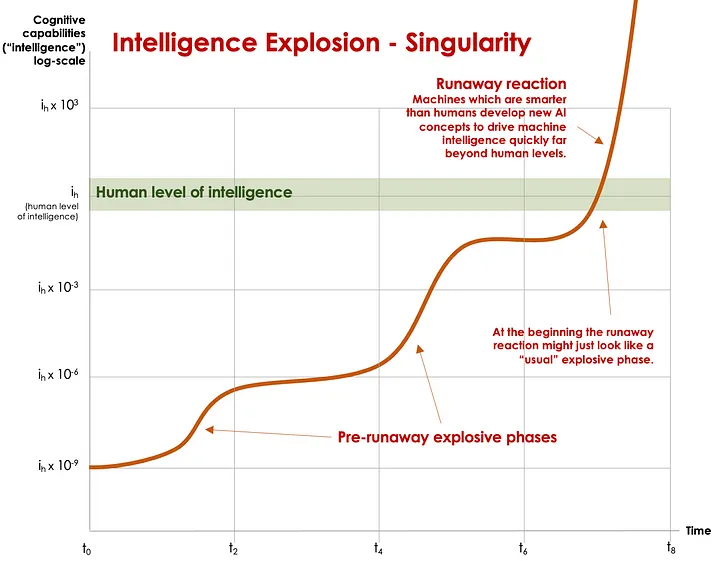

In the long term, the growth of the “I” in AI is exponential, but there are always phases of slow or stagnant growth when AI or one of its subfields has reached the limits of current approaches. The acceleration phases happen when new concepts such as perceptrons, deep learning, CNNs, self-play reinforcement learning, the transformer architecture or foundation models are invented and implemented. Then automated iterations of learning, exploring, adding resources and optimizing often kick in, making systems much better in a very short time. In addition, tangible successes replenish the petri dish in which AI can flourish, attracting research dollars, startup funding, corporate budgets and experts to the field.

I use the term “explodential growth” because, in retrospect, it often looks like exponential growth, yet in its early stages it behaves more like a time bomb. Nothing or almost nothing happens for a very long time, and we are inclined to say that it won’t go off, i.e., that we will never reach this or that goal. In the past (before foundation models), surveys in the AI community asking when AGI or the Singularity would be achieved usually produced a nice Gaussian distribution of answers spread over the next 50 or 100 years. It was essentially guesswork: there was no reasonably clear growth rate that could be extrapolated into the future.

For a long time, explodential growth applied less to AI as a whole than to subfields such as image recognition, chatbots or games. Two brief examples: AI developers struggled with the board game Go for several decades, even after Deep Blue defeated the chess world champion in 1997. Computer programs played Go only at an amateur or intermediate level until 2015, when AlphaGo suddenly made a leap that allowed it to beat professional dan-ranked players (roughly the Go equivalent of grandmasters) and eventually the world’s best Go player. The follow-up AlphaZero then beat AlphaGo at Go and the best other AIs at chess and shogi in 2017/2018 after an extremely short training period.

All of this progress happened extremely quickly, even though advances in the previous decades had been minimal. The explosive growth phase built on completely new concepts (including self-play learning) rather than on the achievements of AI game development decades earlier. My personal takeaway: even if a problem seems unsolvable for machines over an extended period, a new approach and a couple of years could easily let machine intelligence offer solutions far beyond human conception.

In automatic speech recognition (ASR), the “dormant” phase before the onset of explosive growth was even more pronounced. As early as 1952, Bell Laboratories’ “Audrey” could recognize spoken digits. In the early 60s, IBM’s “Shoebox” could recognize 16 words (Audrey’s 10 digits plus arithmetic operators) and, a real breakthrough at the time, perform calculations. Ten years, six more words. ASR’s progress continued at roughly this pace. It took about 60 years after the first developments before systems based on a new technology (deep neural networks, DNNs) reduced recognition error rates so massively that we reached today’s quality of spoken-language recognition and no longer have to repeat inputs three times or train the system on our own voice. A very long time with little tangible progress. Imagine if the World Wide Web, invented in 1989, had only given rise to applications such as search, online shopping or wikis in the 2040s or 2050s.

Here again, explodential growth means that for long stretches it seems we’re not moving at all (at least not in that specific field), as if AI had reached its limits. This phase is sometimes accompanied by an “AI winter” period, characterized by waning interest and frozen research funding (e.g., Bell Labs, a leading institution of the time, defunded speech recognition in the 1960s). Growth happens almost exclusively during explosive thrust phases, and it is impossible to predict whether and when a thrust phase will come and how much progress it will bring.

In the current explosive acceleration phase, which spans a wide range of domains, or at the latest in the next one, intelligent machines may develop entirely new conceptual approaches. These may initially appear on a small scale, then avalanche into a larger one, allowing other machines to become much better within a very short time. Kilo-better machines might then develop more advanced concepts for future mega-better machines. This is a so-called Runaway Reaction according to John von Neumann or Vernor Vinge, which leads to an Intelligence Explosion in which progress rapidly outpaces human intelligence. It would be the last of the explosive thrust phases, one that might stop only at physical constraints such as quantum scales, the speed of light or the expansion of the universe. Hey ho, Singularity!

If we assume that the growth of machine intelligence will not fundamentally change in the future, then we must answer the second question with a cautious yes — machine intelligence could potentially grow at such a rapid rate that it achieves superintelligence in a few years.

Importantly, we may not need a Runaway Reaction to check the second box: the usual growth of intelligence in this or the next explosive thrust phase should suffice.

☑ 1. Are the machines at least close to AGI?

☑ 2. Is machine intelligence really growing so fast that it can reach superintelligence in the foreseeable future?

☐ 3. Will a superintelligent AI really want to dominate the world?

☐ 4. Is it possible for an AI to take over the world simply through superior intelligence?

Head over to medium.com/@maximilianvogel for part 2!