The pace of development in conversational agents and language models is accelerating rapidly. New technological possibilities for interacting with machines by voice raise new questions about the future of natural language interfaces, along with the question of how to teach machines to talk and understand natural language. We regularly invite experts to discuss what the future of natural language interfaces will look like and how these interfaces can add value for users and businesses. This time, we were happy to welcome four professionals from different fields connected to natural language user interaction. We have summarized their talks here.

A Linguistic Approach to NLUI Output Design

How should conversations with voice assistance systems go?

And what are the exact requirements for their speech?

Until now, concrete linguistic considerations have received little attention in the design of language output. Yet language contains much more than content: how we express ourselves determines how we are perceived. The same is true for voice assistants. Large-scale user studies have shown that users have a keen ear for expressions and prefer certain phrasings to others. For example, a voice assistant that uses active phrasing is rated significantly better than one that uses passive phrasing. Findings such as these make it possible to derive concrete best practices and design guidelines for voice output, which voice designers can apply in everyday design processes and which can also serve as the basis for Natural Language Guidelines.

Not everything that works in human-to-human conversations also works in human-to-machine conversations.

Anna-Maria Meck, PhD candidate, LMU Munich

What’s particularly exciting is that not everything that works in human-to-human conversations also works in human-to-machine conversations. What we perceive as natural in interactions with humans (and naturalness is one of the most widely promoted concepts in the design of human-machine interaction) can seem out of place in communication with machines; the keyword here is the uncanny valley.

Speeding up text analysis for the legal industry with explainable AI

While even our children are as used to the virtual world as they are to the real one, and digital innovation is everywhere, some areas are still painfully non-digitized. In these areas, professionals do a lot of unnecessary work when they could be using their time far more efficiently. Lawyers, a highly paid group, are a prime example. They spend a huge amount of time reading and comparing texts to find similarities or contradictions, a task machines can easily do to save time and money. It is important, however, to show legal professionals why and how a text is similar or contradictory so that they can decide on its relevance to their case. This is why Legal Pythia uses explainable AI to help lawyers focus on interpretation rather than on the Sisyphean work of text analysis.
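To make the idea of an explainable comparison concrete, here is a minimal sketch that scores two clauses by word overlap (Jaccard similarity) and returns the shared and differing terms as the "why" behind the score. This is an illustrative stand-in, not Legal Pythia's method; the function names and the example clauses are hypothetical, and real systems would use far richer models.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a deliberately simple tokenizer (assumption)."""
    return set(re.findall(r"[a-z]+", text.lower()))


def explain_similarity(a: str, b: str) -> tuple[float, list[str], list[str], list[str]]:
    """Hypothetical helper: return a Jaccard score plus the evidence behind it,
    i.e. shared terms, terms only in a, and terms only in b."""
    ta, tb = tokenize(a), tokenize(b)
    union = ta | tb
    score = len(ta & tb) / len(union) if union else 0.0
    return score, sorted(ta & tb), sorted(ta - tb), sorted(tb - ta)


# Hypothetical example clauses that agree on most terms but differ on the key ones.
clause_a = "The tenant shall pay rent monthly in advance."
clause_b = "The tenant shall pay rent quarterly in arrears."
score, shared, only_a, only_b = explain_similarity(clause_a, clause_b)
print(f"similarity: {score:.2f}")
print("shared:", shared)
print("only in A:", only_a)
print("only in B:", only_b)
```

The score alone says the clauses are fairly similar; the "only in A" and "only in B" lists show a lawyer exactly where they diverge (payment frequency and timing), which is the part that needs human interpretation.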

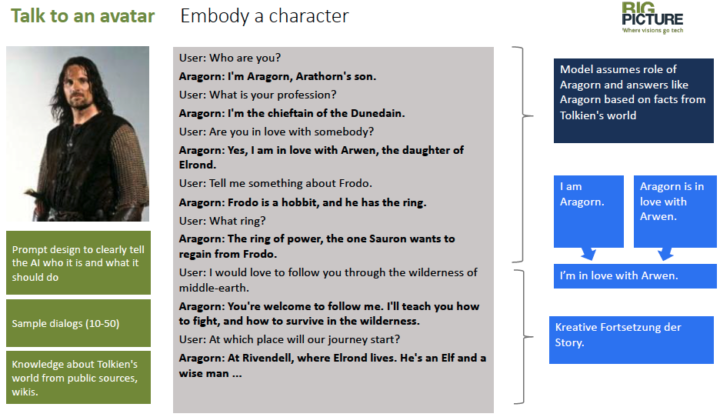

How do I make an object talk like Aragorn, like a car, or like my grandmother?

Artificial intelligence is advancing by leaps and bounds. At the moment, ML-based language generation is just reaching a turning point. So-called large language models (based on the Transformer architecture) are opening up new possibilities for human-machine interaction.

They make it possible to create virtual personas that speak like a car, a sneaker, or a movie hero. The models can combine an instruction like “You are now Aragorn” with pre-existing written knowledge about Aragorn, his world, and his life. They then speak in the first person as Aragorn and can draw on all of this knowledge in dialogue, or develop his story interactively with the user on new topics.

The models can talk to the user either by speech or by text. For setup, they typically require a large amount of generally available material about the “person” (the history or career of a hero, the specification of a car, etc.) and a manageable set of training examples (10, 20, or 50 sample dialogs) tailored to role-play. You don’t need scripted question-answer pairs as traditional chatbots do.
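One way to picture this setup is as prompt assembly: a persona instruction, background knowledge, and a few sample dialogs combined into a single chat-style prompt. The sketch below assumes the sample dialogs are supplied as few-shot examples in the prompt rather than used for fine-tuning, and it assumes the widely used list-of-`{"role", "content"}` message format. The names `Turn` and `build_roleplay_messages` are hypothetical, and no specific model API is called.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """One exchange from a sample dialog (hypothetical structure)."""
    user: str
    character: str


def build_roleplay_messages(persona: str, background: list[str],
                            examples: list[list[Turn]],
                            user_input: str) -> list[dict[str, str]]:
    """Assemble a chat-style prompt: persona instruction plus background facts,
    then sample dialogs as few-shot turns, then the new user message."""
    system = f"You are now {persona}. Stay in character and speak in the first person.\n"
    system += "Background knowledge:\n" + "\n".join(f"- {fact}" for fact in background)
    messages = [{"role": "system", "content": system}]
    # Each sample dialog becomes alternating user/assistant turns.
    for dialog in examples:
        for turn in dialog:
            messages.append({"role": "user", "content": turn.user})
            messages.append({"role": "assistant", "content": turn.character})
    messages.append({"role": "user", "content": user_input})
    return messages


background = ["Heir of Isildur, raised in Rivendell.",
              "Member of the Fellowship of the Ring."]
examples = [[Turn("Who are you?", "I am Aragorn, son of Arathorn.")]]
msgs = build_roleplay_messages("Aragorn", background, examples, "Where were you raised?")
print(len(msgs))
print(msgs[0]["content"])
```

The point of the design is that only the persona text, the background material, and a handful of sample dialogs change from one character to the next. There is no per-question script, which is what distinguishes this approach from traditional chatbots.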

This capability enables entirely new applications, such as truly natural talking cars or avatars that speak like a known person in the metaverse.

How to pass the Turing Test

Has the Turing Test become obsolete? In a word, yes. In fact, the first conversational program that fooled people into thinking a human was responding appeared in 1966 (ELIZA, written by Joseph Weizenbaum at MIT). It used a technique borrowed from therapists: answering statements with open-ended questions.

Person: “I am sad”.

Computer: “Why do you think you are sad?”.
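The technique behind this exchange is simple pattern matching plus pronoun "reflection", modeled on Rogerian psychotherapy. The sketch below is a minimal ELIZA-style responder written for illustration; it is not Weizenbaum's original program, and the rules, the reflection table, and the fallback reply are hypothetical examples.

```python
import re

# Pronoun swaps so the echoed fragment is phrased from the computer's point of view.
REFLECTIONS = {"i": "you", "am": "are", "my": "your", "me": "you", "i'm": "you're"}

# Hypothetical rules in the spirit of ELIZA: (pattern, open-ended reply template).
RULES = [
    (re.compile(r"i am (.*)", re.IGNORECASE), "Why do you think you are {0}?"),
    (re.compile(r"i feel (.*)", re.IGNORECASE), "What makes you feel {0}?"),
]


def reflect(fragment: str) -> str:
    """Swap first-person words for second-person ones."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(statement: str) -> str:
    """Turn a statement into an open-ended question using the first matching rule."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please, go on."  # generic open-ended prompt when no rule matches


print(respond("I am sad."))
print(respond("I am sad about my job"))
print(respond("Hello"))
```

No understanding is involved: the program never models what "sad" means. It only rearranges the user's own words into a question, which is why such a small set of rules could still feel convincingly human.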

Then came the Loebner Prize for the most humanlike conversation, which started in 1990 and finally ended in 2021. But the real breakthrough in Humanizing AI© came during Google’s 2018 keynote, when the company played a recording of its AI placing a phone call to a hair salon and booking a haircut appointment. It was clearly trying to convince the person on the other end that it was human, sprinkling bits of humanized speech, like “um-hum” and a little laugh, into its synthesized voice.

Others soon followed, and voice cloning became real. It has even been used in movies, for example in “Top Gun: Maverick” to recreate the voice of actor Val Kilmer, who lost his voice because of cancer treatment. New startups like Puretalk.ai have taken Humanizing AI© to the next level, using zero-shot technologies and proprietary algorithms to respond to both in-scope and out-of-scope questions with the most human-sounding synthesized voices ever.

Much like speech-to-text has grown from “not quite good enough” to an accepted mode of computer input, natural language and speech synthesis technologies have become so good that they can routinely fool humans.

The Turing Test is obsolete.

Welcome to the future.

The future of natural language interfaces

Some might compare the current landscape of natural language development to the Wild West. In recent years, language models have not only grown many times over, but access to them is also easier than ever. Development is happening everywhere.

The future will be phenomenal.

Brian Garr, Chief Product Officer, Puretalk.ai

Soon, questions will have to be answered and concepts developed about how humanlike these systems should become: what do we want, and what do we need? What regulations must be developed to protect people once machines become indistinguishable from humans, including friends and family members, in tone, sound, and vocabulary? Older people, who have not yet come into focus as a user group, are particularly worthy of protection. Like people with visual impairments, they can benefit especially quickly from natural language systems. Our responsibility, then, is to raise awareness of the biases hidden in datasets. The work going forward will be to train language models on “good”, diverse datasets so that AI becomes truly inclusive.

What does the future hold for NLP? More and more things will probably talk to us soon, so the Internet of Things will expand, and the skill set for developing and using language models will change as well. You won’t have to write scripts and define prompts anymore, because the language models will know the answers themselves. Sounds like science fiction? Maybe. That’s why we won’t be able to avoid defining new approaches to how we communicate with each other. A new way of communicating is coming, and the future, whatever it looks like, will be phenomenal.

This text was written following the presentations and panel discussion at the event “The future of natural language interfaces – How to teach machines to talk and understand natural language”.

Special thanks to our speakers Anna-Maria Meck, Jeremy Bormann, Maximilian Vogel, Brian Garr as well as Anna-Katharina Rausch.