Many of us use GPT-3 or other LLMs in a SaaS way, hosted by their vendors. But how is it like to run a model of the size of GPT-3 in your own cloud?

It’s not trivial to set up, but super exciting to run your own model. Let me tell you how to start out and what outcome you can expect.

BLOOM – Is a transformer-based language model created by 1000+ researchers (more on the BigScience project). It was trained on about 1,6TB pre-processed multilingual text and it is free. The size of the biggest BLOOM model in parameters is 176B, approximately the size of the most successful language model ever, the GPT-3 model of openAI. There are some smaller models available as well, with 7b, 3b, 1b7, etc.

Why would you want to set up your own model when you can use the commercial models as SaaS like water from the tap? Among many reasons the main argument is complete data sovereignty – the data for pre-training and the data entered by the users remain completely under your control – and not under that of an AI company. And there is no real hosted SaaS solution for BLOOM yet.

Ok, so how do you do that?

Model Size

Our Bloom model needs about 360 GB of RAM to run – a requirement that you can’t get with just one double-click on a button with classic cloud hosting and also this is quite expensive.

Fortunately, Microsoft has provided a downsampled variant with INT8 weights (from original FLOAT16) that runs on the DeepSpeed Inference engine and uses tensor paralellism. DeepSpeed-Inference introduces several features to efficiently serve transformer-based PyTorch models. It supports model parallelism (MP) to fit large models that would otherwise not fit in GPU memory.

Here is more info on minimizing and accelerating the model with DeepSpeed and Accelerate.

In the Microsoft Repo the tensors are split into 8 shards. So on the other hand, the absolute model size is reduced and on the other hand, the smaller model is split and parallelized and can thus be distributed over 8 GPUs.

Hosting Setup

Our hoster of choice for the model is AWS because it provides a SageMaker setup for a Deep Learning container that is capable of initializing the model. Instructions for doing so can be found here:

Please consider just the Bloom 176b part, the OPT part is irrelevant for our purposes.

On AWS you have to find the right datacenter to set up the model. The required capacity is not available anywhere. We went to the east coast, to Virginia, on us-east-1.amazonaws.com. You have to get the instances you need through support, you can’t do it by self configuring. You need 8 Nvidia A100 with 40 GB RAM each.

Thomas, our devops engineer found out how to create the environment and he finally set it up. I’d like to take a deep bow to him here for succeeding!

Boot the model

The hosted model can be loaded from the Microsoft repository on Huggingface into an S3 in the same data center – that is what we did, in order to have the model close to the runtime environment – or you can use the model provided by AWS in a public S3 environment. The model size is 180GB.

In the instructions and in the jupyter notebook available on Github you will find the individual steps for creating the model and setting up an endpoint on Sagemaker that are necessary to get the model with low latency running.

Done. The BLOOM 176B model is running now.

In our setup it costs about $32 per hour when running. So it can make sense to boot the model for test rounds and shut it down again to free up resources. With the script you can start it in about 18 min, shutting down and freeing the resources takes seconds.

Use the model

We have to put a custom API gateway and lambda function in the interface on top of the Pagemaker endpoint that allows users to connect externally with an API key – this makes it easier to use and call. Look here for an intro.

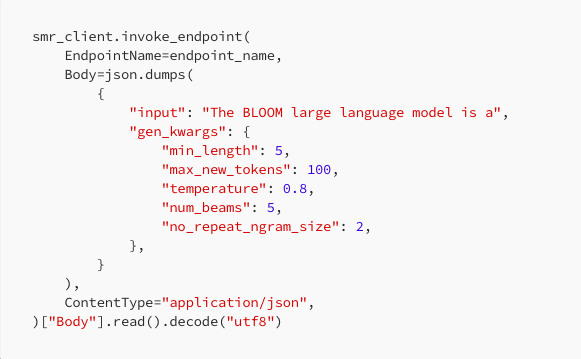

The “call to the Bloom model” is basically exactly the same as other completion models: you enter a text, temperature, max_new_tokens, etc. and get a text response.

First we tested the model interface with a smaller BLOOM model, then with the 1B7 (with SageMaker JumpStart) and then with the large 176B model – it worked.

Well, what is the result?

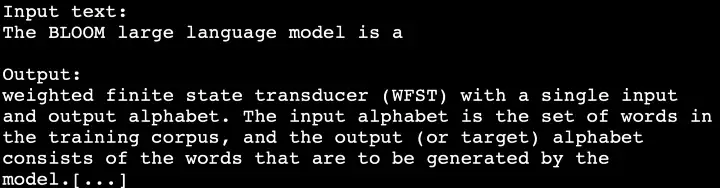

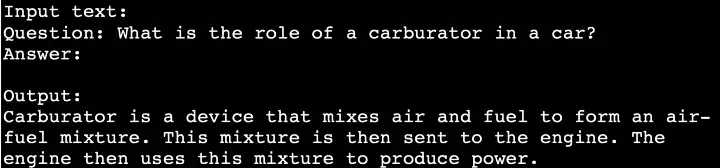

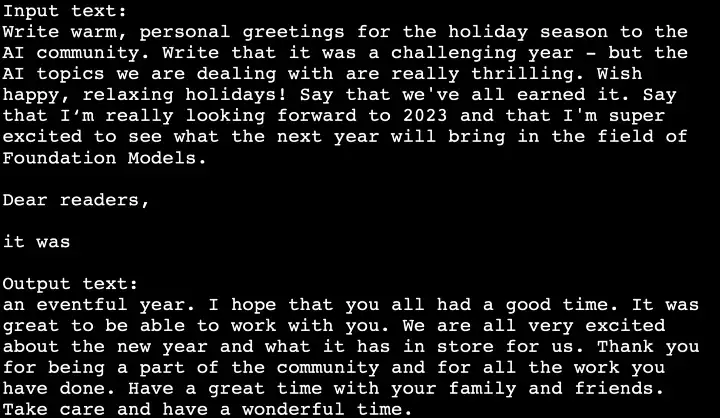

We let the model do two hello-worlds and one goodbye-world:

It works!

Nice, but not very specific. I will report on the results of some ability tests with the BLOOM model in a follow-up article.

Many thanks, ganz, ganz lieben Dank an: Thomas Bergmann, Leo Sokolov, Nikhil Menon and Kirsten Küppers for help with the setup and the article.

All bloomy images generated with OpenAI’s DALL-E2.

Please feel free to ask me questions regarding the BLOOM setup.

Read the full article on medium.com/@maximilianvogel