This article is based on my presentation at the 5th Annual International Conference CURPAS on Autonomous Systems at the Center for Aeronautics and Astronautics (Zentrum für Luft- und Raumfahrt) in Wildau, Germany.

How do we interact with the machine sphere?

Many autonomous systems – for example, the bus that drives through Tegel without a driver, or the pizza delivery drone – currently still have a human attendant who intervenes if something goes wrong. This person can respond when passengers ask questions.

For now, and for a proof of concept, such an attendant is fine. In the long term, however, the device must be able to explain itself. We can’t have complex devices driving around if they can’t interact with their environment.

What could such a user interface look like?

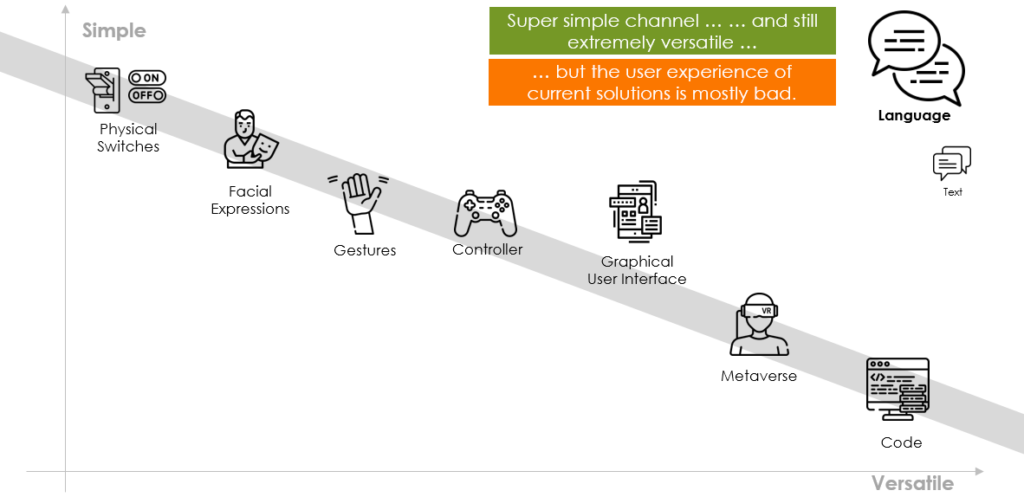

If we look at the main points of contact with machines, we see that the control methods become less simple as the range of interaction grows, and communication becomes more difficult: I can turn a device on and off with a simple switch – but not much more. I can’t trigger complex actions.

In contrast, we see a sweet spot in the use of speech: It can be used to trigger many interactions. At the same time, this type of communication is easy and natural for most people to use.

So why hasn’t voice control really caught on yet?

Many speech interfaces are not good enough in quality and offer a terrible user experience. The machines still have a hard time recognizing what the user wants. I don’t know what the thing can do, and I usually have to learn it the hard way: all too often I’m told that it does not understand me.

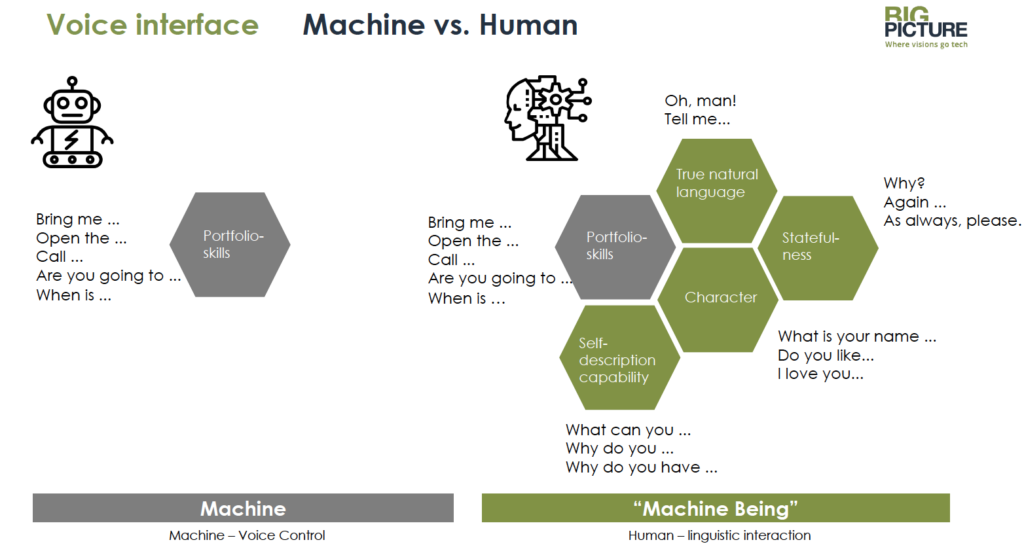

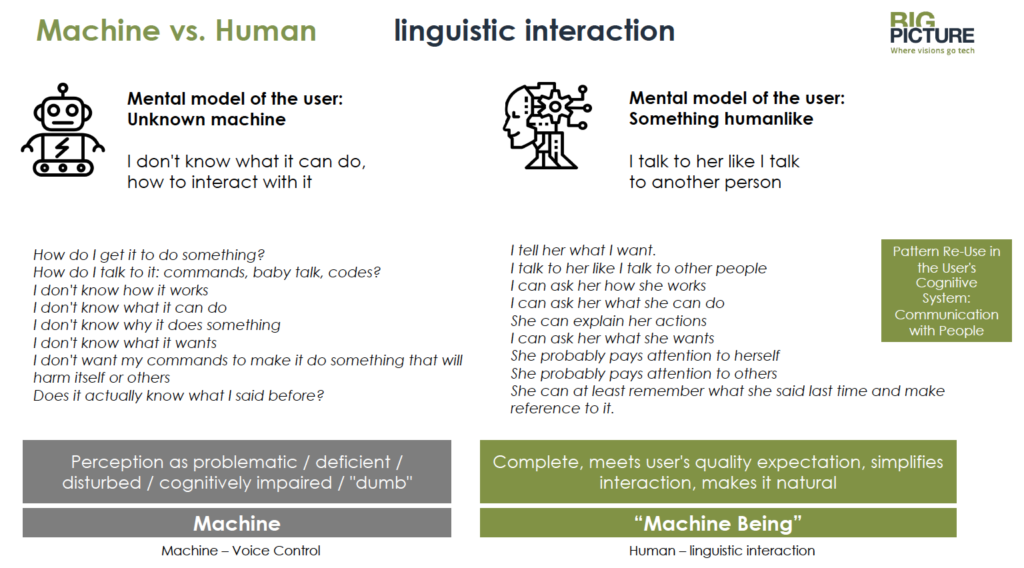

The first commercial speech applications were designed like a graphical user interface: The systems performed exactly the tasks that were stored in their portfolio. Since then, voice assistants have become much broader: The machines can be addressed more generally. Alexa can now respond if I tell her I love her. So voice assistants are evolving from voice automata into machine beings.

Why do we model a machine into a human-like person?

If a machine understands natural language and can converse beyond its portfolio skills, the uncertainty in communication is reduced: For humans, the machine is now no longer an unknown thing, but a specimen of a familiar category: I can talk to it like a human.

Xiaoice is a speech system from Microsoft China. As an avatar, the system has been given the character of a young girl. Many people chat with the software for hours. It has more than 40 million users. This system is based on Large Language Models.

What are these Large Language Models?

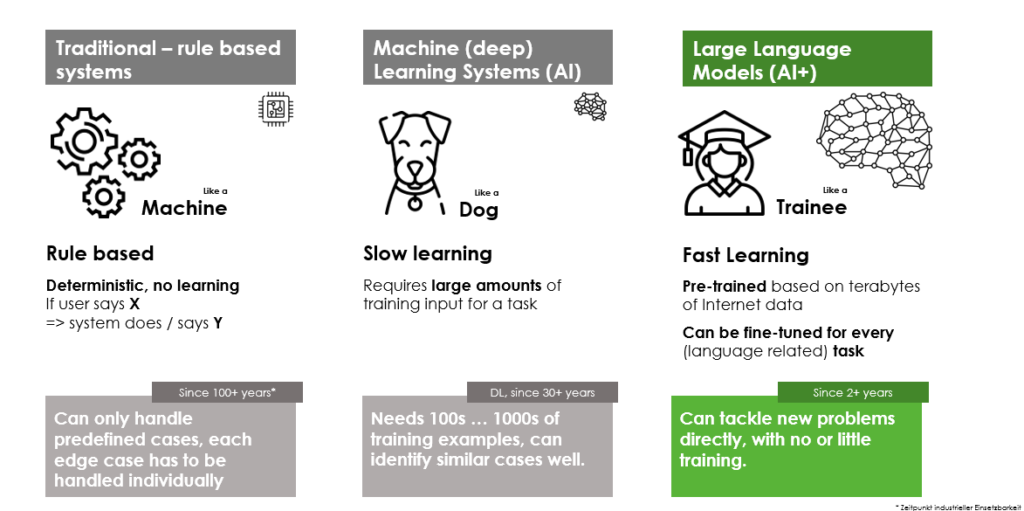

In Large Language Models (LLMs), the system is pre-trained on several terabytes of Internet text. Based on this, the model is able to speak in natural language and answer factual and logical questions. I’m having a conversation with someone who has read Wikipedia. Completely. And in all languages. Then, with specific training, I can tweak it for a specific task.

LLMs work best in English. This is because the LLMs are fed with internet data and most of the material on the web is in English.

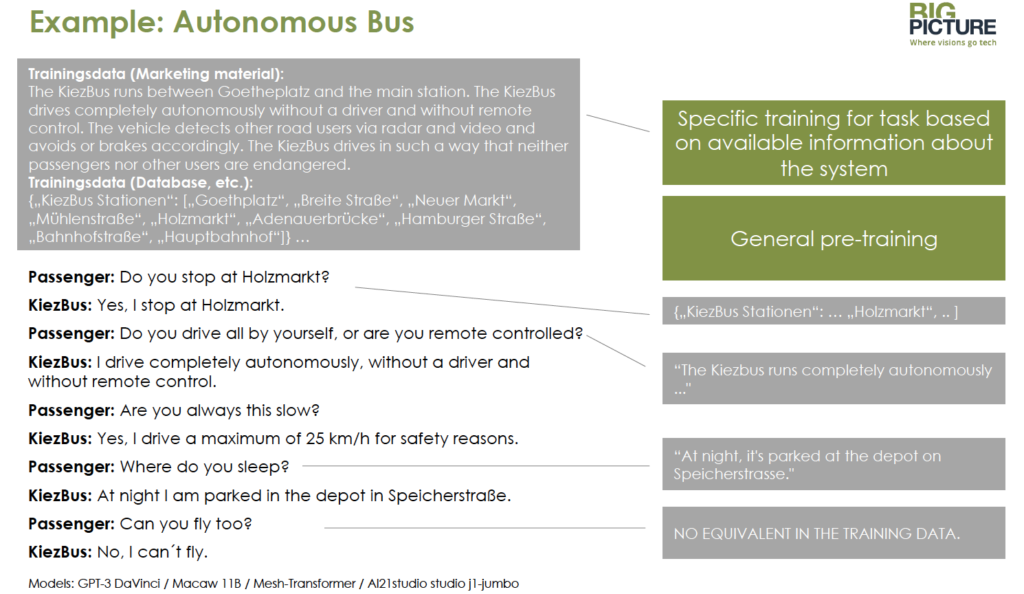

Here I have illustrated task-specific training for an autonomous bus.

Building on the pre-trained model, I feed specific training data into the system, for example marketing and communication material as well as database content about stops or traffic times. With this, the bot can answer many of the questions that are covered in this material.
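To make the idea of "feeding database content" a little more concrete, here is a minimal sketch of how stop data could be turned into question-and-answer training pairs. Everything in it is an assumption for illustration: the stop names, the line name, the field names and the prompt/completion JSONL layout are hypothetical and not the format used in the talk.

```python
import json

# Hypothetical rows standing in for database content about stops.
STOPS = [
    {"stop": "Example Street", "line": "Shuttle 1", "departures": ["09:00", "09:20"]},
    {"stop": "Sample Square", "line": "Shuttle 1", "departures": ["09:05", "09:25"]},
]


def to_training_examples(stops):
    """Turn each stop record into one question/answer training pair."""
    examples = []
    for row in stops:
        times = ", ".join(row["departures"])
        examples.append({
            "prompt": f"When does the bus leave from {row['stop']}?",
            "completion": f"{row['line']} leaves {row['stop']} at {times}.",
        })
    return examples


def to_jsonl(examples):
    """Serialize the examples as JSON Lines, one training pair per line."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples)


if __name__ == "__main__":
    print(to_jsonl(to_training_examples(STOPS)))
```

In practice one would probably generate several phrasings per fact, so the bot does not depend on one exact wording of the question.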

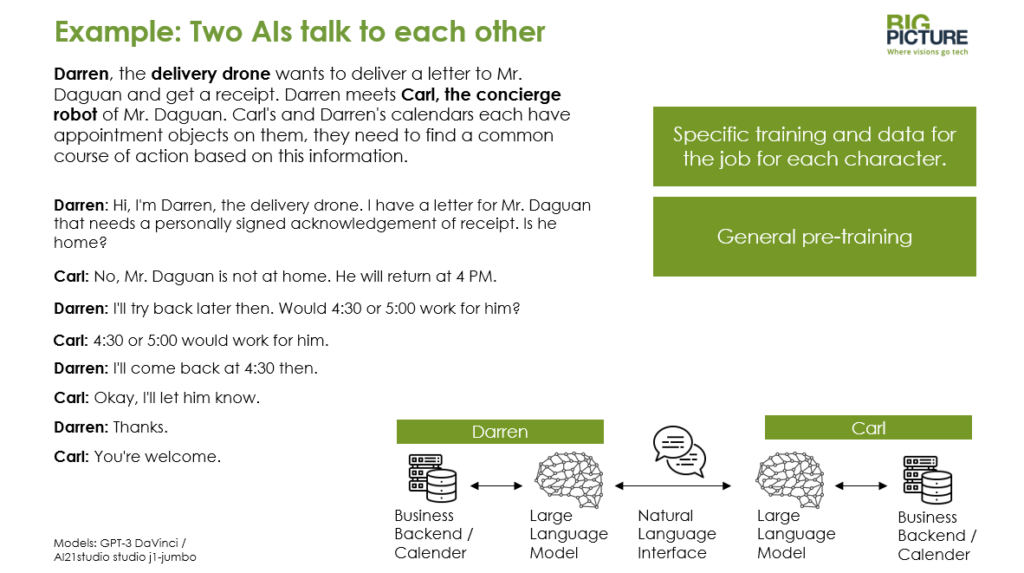

In my last example, we equip two autonomous systems with speech capabilities and let them loose on each other:

Delivery drone “Darren” wants to deliver a letter with acknowledgment of receipt, while concierge robot “Carl” manages the appointment calendar of the letter recipient. Both machines have to find a common time slot based on their appointment calendar entries.

Negotiations are not super fast, but the machines are able to agree on an appointment slot. Two humans might have been able to get this task done in half the time. But for two machines without a defined interface, the result is quite remarkable.
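For comparison, the scheduling task itself is easy once both calendars are available in one place and in one format. The sketch below is not how Darren and Carl negotiated (they talked to each other in natural language); it is a deterministic baseline under assumed conditions. The calendars, the working day and the function names are all hypothetical, and times are minutes since midnight.

```python
def free_slots(busy, day_start, day_end):
    """Return the free (start, end) intervals of a day, given busy intervals."""
    slots = []
    cursor = day_start
    for start, end in sorted(busy):
        if start > cursor and cursor < day_end:
            slots.append((cursor, min(start, day_end)))
        cursor = max(cursor, end)
    if cursor < day_end:
        slots.append((cursor, day_end))
    return slots


def first_common_slot(slots_a, slots_b, duration):
    """Return the earliest interval of `duration` minutes free in both lists, or None."""
    for a_start, a_end in slots_a:
        for b_start, b_end in slots_b:
            start = max(a_start, b_start)
            end = min(a_end, b_end)
            if end - start >= duration:
                return (start, start + duration)
    return None


# Hypothetical calendars: busy intervals in minutes since midnight.
DARREN_BUSY = [(540, 600), (660, 720)]   # 09:00-10:00, 11:00-12:00
CARL_BUSY = [(480, 630), (690, 780)]     # 08:00-10:30, 11:30-13:00

if __name__ == "__main__":
    darren_free = free_slots(DARREN_BUSY, 480, 1020)  # working day 08:00-17:00
    carl_free = free_slots(CARL_BUSY, 480, 1020)
    print(first_common_slot(darren_free, carl_free, 30))
```

The contrast is the point: this code only works because both sides agreed on a data format beforehand, which is exactly the defined interface the two machines did not have.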

What follows from this?

If we equip our public and private spheres with more autonomous systems in the future, then we must ensure that the new entities enrich our everyday lives as understandable, pleasant, exciting interlocutors.

Many thanks to the CURPAS organizers, Prof. Dr. Uwe Meinberg and Dr. Christina Eisenberg, for the wonderful event and the invitation!

Many thanks also to Max Heintze, Kirsten Küppers and Hoa Le- Van Lessen for inspiration and support with the presentation and text.