How Will We Instruct AI in the Future?

Just as we finally started to master the art of prompt engineering after months and years, some people are already saying that we are riding a terminally ill horse.

They argue that prompt engineering, as we know it, will become irrelevant in as little as 6 months, maybe 2 years tops. The problem it addresses will then be solved in a completely different way, or will no longer exist — though opinions and ideas differ as to how and why exactly.

Let’s take a closer look at these arguments and assess their merits. Is prompt engineering merely a short-lived AI summer hit? Or is it here to stay? How will it change and evolve in the upcoming years?

What is Prompt Engineering and Why Should We Care?

A simple prompt like “ChatGPT, please write an email to my employees praising them for profit growth in 2023 and at the same time announce a significant reduction in staff in an empathetic way” doesn’t require much prompt engineering. We simply write down what we need and it usually works.

However, prompt engineering becomes crucial in the context of applications or data science. In other words, it’s essential when the model, as part of a platform, has to handle numerous queries or larger, more complex data sets.

The aim of prompt engineering in applications is usually to elicit correct responses to as many individual interactions as possible while avoiding certain responses altogether: For example, a prompt template in an airline service bot should be so well-crafted that the bot:

- can retrieve the relevant information based on the user’s question

- can process the information to give the user an accurate, comprehensible, concise answer

- can decide whether it is capable of answering a question or not and can give the user an indication where to find additional information

- never allows itself to get manipulated by users into granting discounts, free vouchers, upgrades or other goodies

- does not engage in off-topic dialogues about subjects such as politics, religion or compulsory vaccination

- Does not tell ambiguous jokes about flight attendants and pilots

- Does not allow prompt hijacking

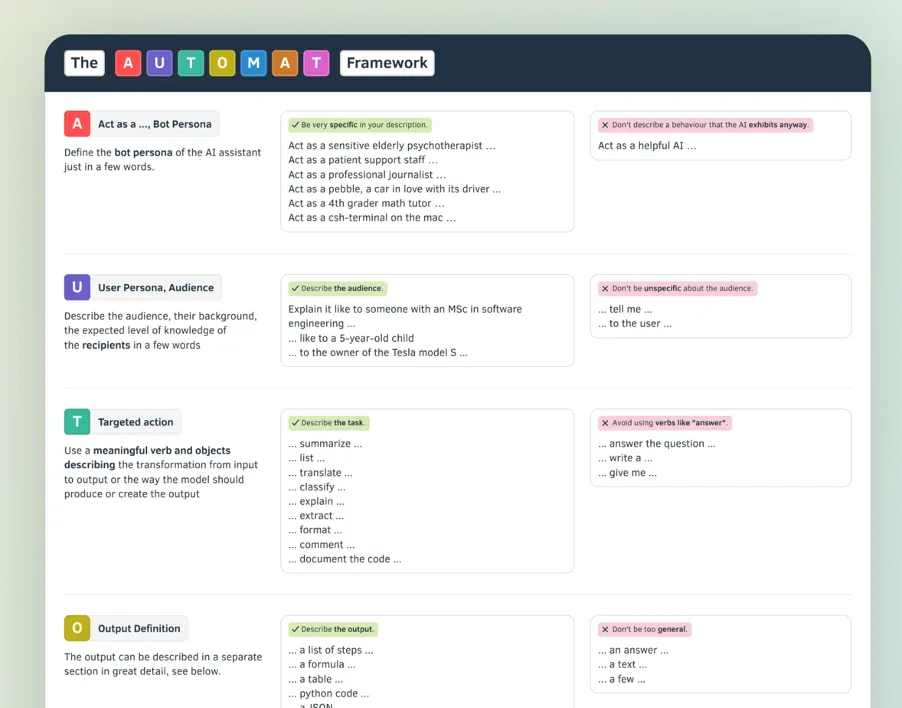

Prompt engineering provides the model with the necessary data to generate the response in a comprehensible format, specifies the task, describes the response format, provides examples of appropriate and inappropriate responses, whitelists topics, defines edge cases and prevents abusive user prompts.

Apart from a few key phrases, the prompt does not rely on magic formulas that trigger crazy hidden switches in the model. Instead, it describes the task in a highly structured way, as if explaining it to a smart newbie who is still unfamiliar with my company, my customers, my products and the task in question.

So Why is Prompt Engineering a Dead Horse?

Those who make this claim typically give several reasons, with varying degrees of emphasis.

The top reasons given are:

1) While the problem formulation is still necessary for the model, the actual crafting of the prompt — i.e., choosing the right phrases — is becoming less critical.

2) Models are increasingly better at understanding us. We can roughly scribble down the task for the model in a few bullet points, and thanks to its intelligence, the model will infer the rest on its own..

3) The models will be so personalized that they will know me better and be able to anticipate my needs.

4) Models will generate prompts autonomously or improve my prompts.

5) The models will be integrated into agentic setups, enabling them to independently devise a plan to solve a problem and also check the solution.

6) We will no longer write prompts, we will program them.

1) Prompt engineering is dead. But the problem formulation for the model is still needed and is becoming even more important.

The Harvard Business Review, for example, argues this way.

Yes, yes, I agree: The problem formulation for the model is really the most important thing.

But that is precisely the central component of prompt engineering: specifying exactly how the model should respond, process the data and so on.

This is similar to how software development is not about the correct placement of curly brackets, but rather about the precise, fail-safe, and comprehensible formulation of an algorithm that solves a problem.

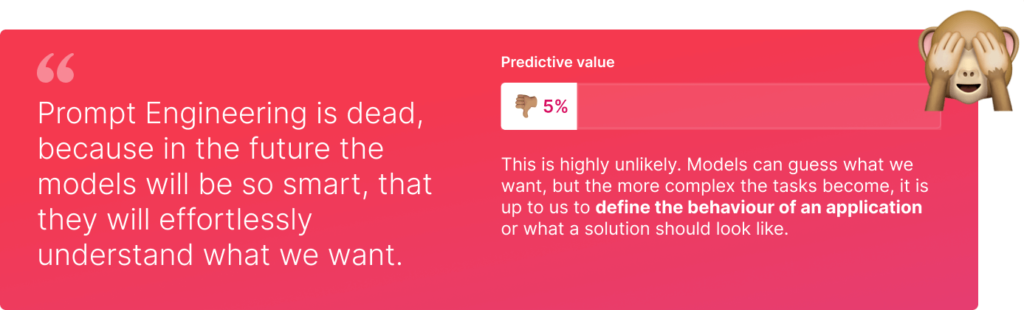

2) The models will understand us better and better. So, in the future, we will sketch out our task for the model in a few bullet points and the model will somehow understand us. Because it’s so smart.

Here is a Medium story on that. Here and here are some Reddit discussions.

I think we all agree that the capabilities of the models will evolve quickly: We will be able to have them solve increasingly complex and extensive tasks. But now let’s talk about instruction quality and size.

Let’s assume we have some genies that can solve tasks of various complexity: One can conjure me a spoon, the second a suit, the third a car, the fourth a house and the fifth a castle. I would probably brief the genies more extensively and more precisely the better they are, and the more complex the objective. Because I have a higher degree of freedom with complex items than with simple ones.

And that is exactly the case. I’ve been working with generative AI since GPT-2. And I’ve never spent as much time on prompt engineering as I do today. Not because the models are dumber. Because the tasks are much more demanding.

The only true thing about the above argument is that prompt engineering becomes more efficient with better models: If I put twice as much effort into prompt engineering in newer models, I get an output of a value, complexity, and precision that is probably closer to four times what I used to get with older models.

At least that’s how it feels to me.

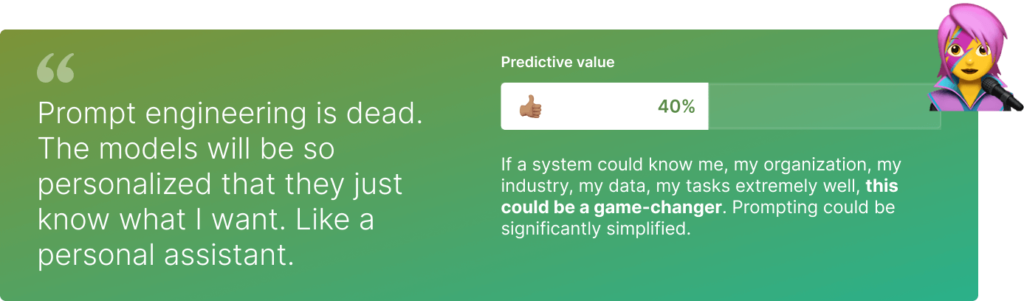

3) The models will be personalized and tailored to me. So they’ll know exactly what I want and I won’t have to explain it to them.

There’s something to this argument. When I ask a future model to suggest a weekend with my wife for our 5th wedding anniversary, it will already know that my wife is a vegan and that I prefer the mountains to the beach. And that we’re a bit short of cash at the moment because we just bought a house. I no longer need to say that explicitly.

But I don’t see the greatest potential for individualization in relation to a person and their preferences and tasks. Rather, it is in relation to organizations, their business cases, and data.

Imagine if a model could correctly execute an instruction like: “Could you please ask the Tesla guys if they have sent the last deliverables to the client and then check with finance when we can issue the final invoice?”

The smartest model in the world can’t execute this instruction because it doesn’t know the “Tesla guys” (a project for Tesla Inc? Or a biopic on Nikola?), the deliverables and who exactly “finance” is and how to reach them.

It could be a game changer if a model could understand something like this. It would just be a helpful fully skilled colleague. The difference between a valuable, experienced employee and a newcomer (in whom you would rather invest time) is often not in their broader domain knowledge, but in their contextual knowledge: They know the people, the data, the processes of my organization. They know what works, what doesn’t, and whom to talk to when things don’t work. An experienced model could have this contextual knowledge. That would be incredibly valuable: Working 24/7 for just a few cents per hour.

This would be useful not just for ad hoc jobs like the Tesla instruction above, but also in an application context, such as in setting up a structured AI-based system for processing insurance claims, for example: A model which knows how we in the claims department of our insurance company process customers emails could be a great help. For instance, it could assist in setting up algorithms and prompts for automated processing.

At present, it is not clear how a model could obtain this knowledge. Traditional fine-tuning with structured training material would certainly require far too much effort in terms of data provision. Letting the model continuously follow the conversations and messages of a team or department could be a possible solution. Maybe you have a better idea?

Even with a personal model, that doesn’t mean that I won’t need to do any prompt engineering. But I can let the model, or the system in which it is embedded, do a lot of the heavy lifting for me.

My dear AI aficionados and fellow prompt engineers: I am looking forward to an incredibly exciting future — one of which we do not yet know exactly what it will bring, except, of course, that it will continue to be extremely thrilling as well challenging.

You can read the full story here.

Many thanks to Almudena Pereira and Tian Cooper for support with the story.

Follow me on Medium or LinkedIn for updates and new stories on generative AI and prompt engineering.